Jenkins Case Study: Graylog

Modernizing Graylog’s DevOps Infrastructure To Support A Complex & Dynamic Platform

By Donald Morton and Alyssa Tong

SUMMARY: At this industry-leading log management platform, which powers tens of thousands of enterprise installations worldwide, the new build and release manager set out to use the power and flexibility of Jenkins' latest features to enhance the existing DevOps environment.

CHALLENGE: Upgrade Graylog’s Jenkins installation to the latest version to:

- enable expanded use of infrastructure-as-code
- ensure quick and straightforward disaster recovery for Jenkins
- provide visibility for all during the release process

SOLUTION: A flexible and modern DevOps platform for Graylog.

RESULTS:

- Maintainability of jobs improved through infrastructure-as-code
- Upgrades went from never performed to completed within minutes
- Observability of builds increased through the Blue Ocean UI
- A scalable infrastructure to support 40,000 enterprise installations and thousands of IT professionals worldwide

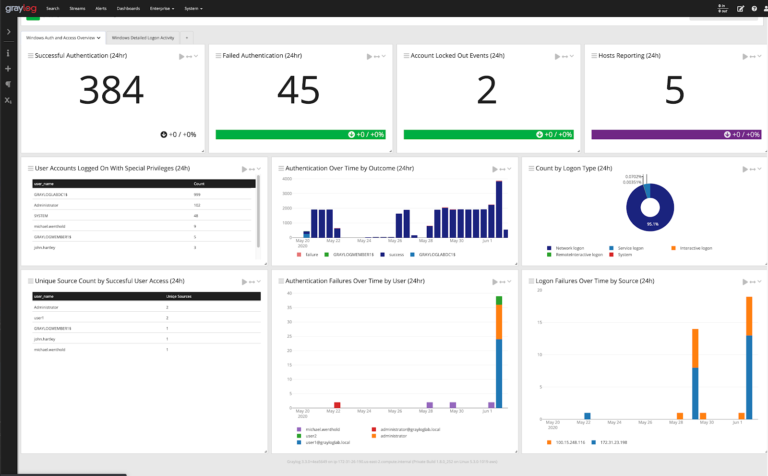

In software development, innovation, agility, and stability are paramount to a company’s success. This is especially true for the provider of a centralized log management platform with over 40,000 installations worldwide among enterprise clients in the government, fintech, education, and telecom industries. Graylog has led the pack in creating a log management solution that clients can rely on. Its easy-to-use web interface lets users retrieve the information they need from millions of log records with a basic search, and it returns results in milliseconds. Users can then sort the data into useful charts, tables, and trend indicators.

"It gives you the ability to aggregate high volumes of logs from multiple sources into one place, making them easily searchable as well as providing the ability to create dashboards and visualizations," said Donald Morton, Graylog’s Build and Release Engineer. "In the old days, people would just log into a server and read the log files there. But with the advent of the cloud, that’s becoming less and less feasible. There’s just too much data, and there’s a lot of insight to be gained by having it all normalized."

That’s why thousands of IT professionals across the enterprise rely on Graylog’s scalability, full access to complete data, and exceptional user experience to solve security, compliance, operational, and DevOps issues every day. The service offers fast analysis, a robust and easy-to-use analysis platform, and simple administration and infrastructure management. When Morton recently joined Graylog to manage its Jenkins installation, he committed to making the DevOps platform that Graylog’s developers and software engineers rely on as robust and slick as the service its clients use.

Feature-rich Jenkins provides the power and flexibility needed to amp up infrastructure

"When I joined Graylog this year, it was obvious the infrastructure was well designed," said Morton, "but it also hadn’t been changed much in four years. Graylog’s build system is fairly complex, and I knew they would benefit a lot from the power and flexibility of Jenkins' newest features."

"Jenkins is a bit behind the scenes from the customer’s perspective," he added. "But it enables us to continue to provide a steady stream of new features and bug fixes to the product, which ultimately results in happier customers and happier developers."

Graylog’s Software Architect, Bernd Ahlers, joined Morton to help with the transition to the new system, using infrastructure-as-code as much as possible. Their immediate goal was to move to a configuration-as-code setup so that the build and release cycle would be easier to manage and, if needed, disaster recovery for Jenkins would be simple and quick.

"Jenkins' flexibility is its real power," said Morton. "When you have complex needs like Graylog does, you need something that can handle anything you can throw at it."

With that in mind, Morton shared his personal objectives for modernizing Graylog’s Jenkins installation:

- "If it blows up, I want it to be easy to fix. This also translates into being easy to upgrade. That’s less time spent doing stuff, which is money saved."
- "I want traceability for the whole system. When something breaks, other employees should be able to look in the git history to see what changed recently."
- "I don’t want to see multiple jobs all doing very similar things, but slightly differently. We’ll use shared libraries for that. That means: less code drift, less complexity, and a whole lot easier to manage."
- "I want to simplify the release process. It should be one button press, not 2-4 hours of manual tasks."

The final objective, a one-button release, has not yet been achieved, but it is well within reach using Jenkins!
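To make the shared-library objective more concrete, here is a minimal sketch of a custom pipeline step defined in a Jenkins shared library. It is an illustration only and not Graylog's code: the step name `buildPackage`, the `format` parameter, and the `package.sh` script are all hypothetical.

```groovy
// vars/buildPackage.groovy in a hypothetical shared-library repository.
// A file under vars/ that defines call() becomes a custom step that any
// pipeline loading the library can invoke by the file's name.
def call(Map config = [:]) {
    // Hypothetical parameter: which package format to build (defaults to 'tarball').
    String format = config.get('format', 'tarball')

    echo "Building ${format} package"

    // Placeholder script; the article does not describe the real build tooling.
    sh "./package.sh ${format}"
}
```

A Jenkinsfile that loads the library (for example with `@Library('build-helpers') _`, where the library name is again an assumption) could then call `buildPackage(format: 'deb')` in several jobs, so the packaging logic lives in one place instead of drifting across near-duplicate jobs.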

The nuts and bolts of creating visibility and automation 'round the clock

Many of Graylog’s software developers work out of the corporate offices in Hamburg, Germany, and Houston, Texas. But the company also has a number of remote technical employees around the world, which makes round-the-clock automation and visibility for everyone imperative. So how did the team attack this challenge?

First, they created a Terraform module to spin up the infrastructure and an Ansible playbook to install software on the machines. Jenkins itself runs from a Docker image, and its configuration is handled through the Jenkins Configuration as Code (JCasC) plugin. The freestyle jobs were rewritten in Groovy as declarative pipelines, and the team set up the GitHub Branch Source plugin so that Jenkins can create jobs automatically. According to Morton, this reduces manual setup even further.

They now store the Groovy code for the pipelines in Git, which allows them to back out changes to jobs if something goes wrong. They were also able to retire a custom daemon used for handling webhooks, which required manual configuration for each job. The GitHub Branch Source plugin now handles this automatically and gives the developers "a very nice UI" for looking at their build history.

"Having separate jobs for each branch, PR, and tag makes tracking down what was built way easier," said Morton. "And the fact that Jenkins manages their creation is super nice."

Declarative pipelines allow them to split their builds into multiple stages and run certain things in parallel, with what Morton calls "a nice flowchart to show the progress." They also now have Prometheus and Grafana pulling metrics from their Jenkins instance. The metrics show about 76 builds per day, 99% of which are snapshot builds, an area where the team previously had no visibility.
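A declarative pipeline with sequential and parallel stages might look roughly like the sketch below. It is not Graylog's actual Jenkinsfile: the stage names, the shell scripts, and the `main` branch name are hypothetical placeholders chosen for illustration.

```groovy
// Jenkinsfile: hypothetical sketch of a declarative pipeline.
pipeline {
    agent any

    stages {
        stage('Build') {
            steps {
                // Placeholder build command.
                sh './build.sh'
            }
        }

        stage('Verify') {
            // Stages nested in a parallel block run concurrently.
            parallel {
                stage('Unit tests') {
                    steps {
                        sh './run-unit-tests.sh'
                    }
                }
                stage('Lint') {
                    steps {
                        sh './run-lint.sh'
                    }
                }
            }
        }

        stage('Publish snapshot') {
            // In a multibranch job, run this stage only for the named branch.
            when { branch 'main' }
            steps {
                sh './publish-snapshot.sh'
            }
        }
    }
}
```

With a multibranch setup such as the one the GitHub Branch Source plugin provides, a file like this is picked up from each branch, pull request, or tag, and each stage appears as a separate node in the pipeline visualization.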

"Upgrades are very simple now," said Morton. "We rebuild the Docker image, and it automatically updates Jenkins to the latest LTS and updates all plugins to the latest versions. It only takes a few minutes. The configuration of Jenkins itself is done through a single YAML file."

Happier developers, impactful results and more modernization on the horizon

Of course, a new employee wants to make an impact, and, fortunately, Morton can point to the fact that Graylog’s team of developers seems to be quite pleased with the upgrades to date.

"Overall, it is a very nice system," Morton emphasized. "The developers are happy with the changes they’re seeing, especially because the visibility frees them up to focus on software enhancements and incremental releases with confidence and without needless delays."

"Just this morning," he said, "we caught an issue where Graylog wouldn’t start up after a PR was merged. This was on the same day we were going to create a new release. We have Jenkins set up to deploy snapshot builds to our dev Graylog instance, so obviously, someone noticed when it wasn’t working."

It was a simple issue, easily fixed, but Morton doesn’t want that happening to Graylog customers. "That’s where the ultimate impact would occur," he added.

Graylog also provides the product in a wide range of formats, including tarballs, DEB packages, RPM packages, OVA images, AMI images, and Docker images. Many of the technology team members have different installation needs, so each format is built separately.

The results of the upgrade met the team’s expectations: easier maintenance through infrastructure-as-code, system upgrades completed within minutes, and clear visibility into builds with Blue Ocean.

"But," Morton continues, "there’s more good work to do: our next steps are to continue iterating on our declarative pipeline jobs to improve build time and add additional stages for smoke testing. Also on deck is to optimize the release process. It, too, is fairly complex, with many manual steps. I’ve already started working on automating some of that in Jenkins, but it’s not entirely there yet. We’ll keep coding away using Jenkins until the processes are completely optimized and automated."